J. Cent. South Univ. (2012) 19: 522-526

DOI: 10.1007/s11771-012-1035-0

Recognition algorithm for turn light of front vehicle

LI Yi(李仪), CAI Zi-xing(蔡自兴), TANG Jin(唐琎)

School of Information Science and Engineering, Central South University, Changsha 410083, China

© Central South University Press and Springer-Verlag Berlin Heidelberg 2012

Abstract:

Intelligent vehicles need the turn light information of front vehicles to make decisions in autonomous navigation. A recognition algorithm was designed to obtain this information. An approximate center segmentation method was designed to divide the front vehicle image into two parts by using geometric information. The vehicle image was filtered by morphologic features, and the number of remaining pixels was processed by an adaptive threshold method to recognize the flashing of the lights. The experimental results show that the algorithm can not only distinguish the two turn lights of a vehicle but also recognize their state. The algorithm is quite effective and robust, with satisfactory real-time performance.

Key words:

intelligent vehicle; turn light recognition; adaptive threshold; front vehicle

1 Introduction

Perception of the external environment is the core of an intelligent vehicle system. It involves a number of aspects, for instance, obstacle detection, road recognition, and vehicle detection and tracking [1-2]. In autonomous navigation, an intelligent vehicle needs to make decisions such as steering, braking and accelerating. To make correct decisions, it needs to know its own state and information about the external environment, one element of which is the behavior of the front vehicle. For example, when overtaking, an intelligent vehicle must know whether the front vehicle intends to change lanes, which is signaled by its turn light. It is therefore important to recognize the turn lights of front vehicles.

In Ref. [3], a vehicle tail light detection algorithm based on analyzing the texture of the tail lamp area is proposed. In Ref. [4], a fast vehicle light locating method based on vehicle light characteristics and horizontal gray level difference projection is provided. In Refs. [5-7], bounding boxes of paired vehicle headlight candidates are examined. These methods only locate vehicle lights and cannot recognize their state. In Refs. [8-9], turn light recognition is addressed, but it depends heavily on the location of the turn lights on the vehicle. In Refs. [10-12], global feature extraction and description methods are described.

To solve these problems, a real-time turn light recognition method is introduced in this work. First, the framework is built and its data flow is analyzed. Second, to distinguish the left turn light from the right one, a vehicle rear center division method is provided. Third, to obtain the turn light information, an adaptive threshold algorithm is designed. Finally, the whole algorithm is implemented and tested under several different circumstances.

2 System design of turn light recognition

2.1 Turn light recognition framework

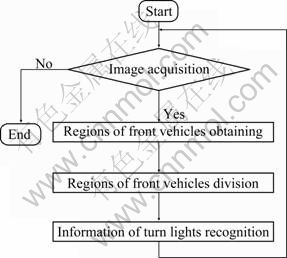

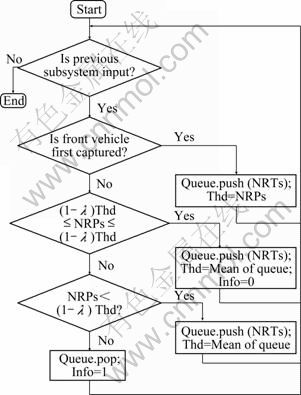

In the framework, the first step is image acquisition through the camera; if an image is acquired, processing goes to the next step, and otherwise it ends. The second step is obtaining the region of the front vehicle with the vehicle detection subsystem. In the third step, the vehicle image is divided into two parts according to the vehicle location in the image, with the left turn light in the left part and the right turn light in the right part. In the final step, the two parts are converted to another color space and de-noised, and an adaptive threshold method is then used to determine whether each turn light is on. The framework of turn light recognition is shown in Fig. 1.
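The four framework steps can be sketched as a per-frame loop. This is a minimal Python sketch, not the authors' C++/OpenCV implementation: the callables `acquire`, `detect`, `split` and `recognize` are hypothetical placeholders for the acquisition, vehicle detection, division and recognition stages, and a single front vehicle per frame is assumed. Passing the side name to `recognize` lets each side keep its own temporal state.

```python
from typing import Callable, List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) of the vehicle rectangle


def run_framework(
    acquire: Callable[[], Optional[object]],
    detect: Callable[[object], Optional[Rect]],
    split: Callable[[object, Rect], Tuple[object, object]],
    recognize: Callable[[str, object], int],
) -> List[Optional[Tuple[int, int]]]:
    """Process frames until acquisition fails (hypothetical stage callables).

    Returns one entry per frame: (left_info, right_info), where 1 = light on
    and 0 = off, or None when no front vehicle was detected in that frame.
    """
    history: List[Optional[Tuple[int, int]]] = []
    while True:
        frame = acquire()            # step 1: image acquisition
        if frame is None:            # no image: go to the end
            break
        rect = detect(frame)         # step 2: front vehicle detection
        if rect is None:
            history.append(None)
            continue
        left, right = split(frame, rect)              # step 3: left/right division
        history.append((recognize("left", left),      # step 4: per-side
                        recognize("right", right)))   # turn light recognition
    return history
```

With stub stages, the loop stops as soon as `acquire` returns `None`.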

2.2 Data flow of turn light recognition

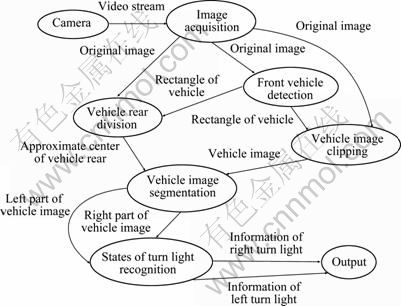

The data flow is shown in Fig. 2. Image acquisition processing converts the video stream from the camera into original images. Vehicle image clip processing combines the vehicle rectangles output by front vehicle detection processing with the original image to obtain the vehicle image. Vehicle rear division processing then computes the approximate center of the vehicle rear from the vehicle rectangle and the original image, and vehicle image segmentation uses this center to divide the vehicle image into two parts: the left part and the right part. These two parts are the inputs of turn light state recognition processing, and its outputs, the turn light information of each part, are integrated by output processing. The front vehicle detection can be realized by the method in Ref. [13]. As Fig. 2 shows, the two key steps are vehicle rear division and turn light recognition.
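The vehicle image clip and segmentation steps amount to array slicing. The sketch below assumes the image is a list of pixel rows and the vehicle rectangle is given as (Vx, y, Vw, h) with the origin at the top-left; the function name `clip_and_split` is hypothetical, and the split column vc is taken as an input rather than computed here.

```python
from typing import List, Tuple

Image = List[List[int]]           # rows of pixel values (a stand-in for a real image)
Rect = Tuple[int, int, int, int]  # (Vx, y, Vw, h) in image coordinates, origin at top-left


def clip_and_split(image: Image, rect: Rect, vc: int) -> Tuple[Image, Image]:
    """Clip the vehicle rectangle and split it at the absolute column vc.

    vc is measured from I(0,0) along the x-axis; it is clamped into the
    rectangle so that both parts remain valid (possibly empty) slices.
    """
    x, y, w, h = rect
    vc = max(x, min(vc, x + w))
    rows = image[y:y + h]                       # vehicle image clip
    left = [row[x:vc] for row in rows]          # part holding the left turn light
    right = [row[vc:x + w] for row in rows]     # part holding the right turn light
    return left, right
```

Clamping vc is a defensive choice for the sketch: an approximate center that drifts outside the rectangle yields an empty part instead of an indexing error.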

Fig. 1 Turn light recognition framework

3 Approximate center of front vehicle division

The location of the turn lights differs between vehicle models, so a method that relies only on detecting the lamps may fail. A method is therefore proposed that works without knowing the distance between the front vehicle and the intelligent vehicle. Its main idea is to use the approximate center of the front vehicle rear to divide the front vehicle image into two parts, with the left turn light in the left part and the right turn light in the right part.

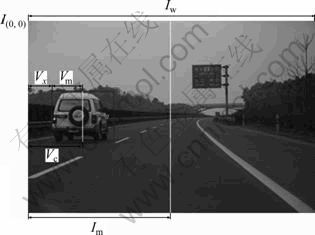

In Fig. 3, I(0,0) is the coordinate origin, located at the top-left corner of the image. Iw is the width of the view image, and Im is half of Iw. Vw is the width of the vehicle rectangle in the view image, and Vm is half of Vw. Vx is the distance along the x-axis from the left edge of the vehicle rectangle to I(0,0). Vc is the distance along the x-axis from the approximate center of the vehicle rear to I(0,0). The vehicle image is divided into two parts at Vc, so locating Vc is the critical problem.

Three extreme situations are discussed in this work: 1) When the whole vehicle just appears at the left edge of the view, Vx=0 and Vc=ε1 (ε1>0). 2) When the whole vehicle just appears at the right edge of the view, Vx=2Im-2Vm and Vc=Iw-ε2 (ε2>0). 3) When the vehicle is exactly in the middle of the view, Vx=Im-Vm and Vc=Im.

From the first situation (Vx=0, Vc=ε1) and the third situation (Vx=Im-Vm, Vc=Im), the following linear formula is obtained:

$$V_c = \varepsilon_1 + \frac{I_m - \varepsilon_1}{I_m - V_m} V_x \tag{1}$$

Substituting Vx=2Im-2Vm (the second situation) into Eq. (1) gives

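$$V_c = \varepsilon_1 + \frac{I_m - \varepsilon_1}{I_m - V_m}(2I_m - 2V_m) = 2I_m - \varepsilon_1 = I_w - \varepsilon_1 \tag{2}$$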

Fig. 2 Data flow of turn light recognition

Fig. 3 Diagram of approximate center

Comparing Eq. (2) with the second situation (Vc=Iw-ε2) shows that ε1 equals ε2 for the same vehicle. Writing ε=ε1=ε2, the conclusion is that, when Vm is fixed, Vc is linear in Vx:

$$V_c = \varepsilon + \frac{I_m - \varepsilon}{I_m - V_m} V_x \tag{3}$$

In Eq. (3), Vx ∈ [0, 2Im-2Vm], ε is a constant, and ε>0.

In practical application, Vm changes with the distance between the intelligent vehicle and the front vehicle, so the division of the front vehicle rear is only approximate; nevertheless, it satisfies the requirements.
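Eq. (3) can be checked numerically with a small helper. In this sketch, `approximate_center` is a hypothetical name, and Iw=640 (Im=320), Vw=200 (Vm=100) and ε=10 are illustrative values, not the settings used in the experiments.

```python
def approximate_center(vx: float, vm: float, im: float, eps: float) -> float:
    """Approximate x coordinate Vc of the vehicle-rear center, following Eq. (3).

    vx:  distance from the left edge of the vehicle rectangle to I(0,0),
         expected in [0, 2*im - 2*vm]
    vm:  half of the rectangle width Vw
    im:  half of the image width Iw
    eps: the constant ε > 0
    """
    if not 0 < vm < im:
        raise ValueError("expected 0 < Vm < Im")
    if not 0 <= vx <= 2 * im - 2 * vm:
        raise ValueError("Vx outside [0, 2Im - 2Vm]")
    # Straight line through (0, ε) and (Im - Vm, Im)
    return eps + (im - eps) / (im - vm) * vx
```

With these values the three extreme situations give Vc=ε=10, Vc=Im=320 and Vc=Iw-ε=630; pixels with x < Vc then go to the left part.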

4 Information of turn light recognition

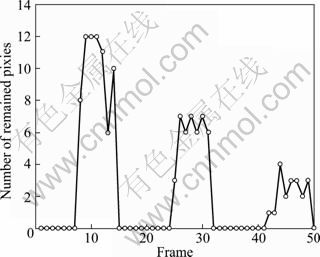

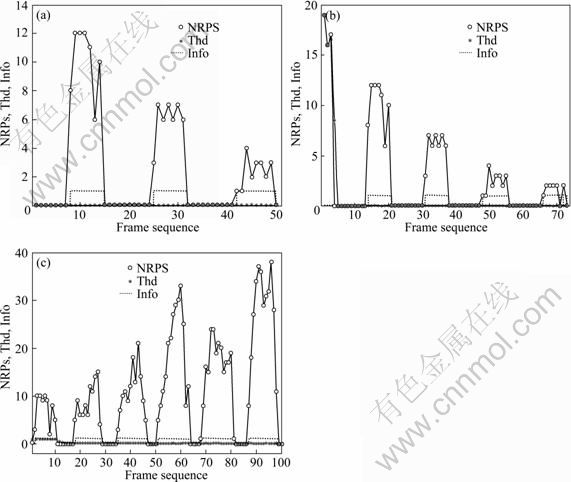

First, initialization is performed before the turn light information is obtained. The parts of the vehicle image are converted from RGB color space into HSV color space [14]. Then, value ranges are set for the H-, S- and V-components separately, and the pixels are filtered by the values of the three components. If a turn light is on, its pixels are preserved. Because a flash follows an “off-on-off” process, the number of remaining pixels (NRPs) over successive frames forms a waveform. Fig. 4 shows such a waveform for a front vehicle moving farther and farther from the intelligent vehicle.
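The HSV filtering and NRP count for one image part can be sketched with Python's standard `colorsys` module. The hue, saturation and value bounds are hypothetical, since the text does not give the values used; note also that `colorsys` returns H, S and V in [0, 1], whereas OpenCV's 8-bit HSV conversion scales H to [0, 180) and S, V to [0, 255], so thresholds do not carry over directly.

```python
import colorsys
from typing import Iterable, Tuple


def count_remaining_pixels(
    pixels: Iterable[Tuple[int, int, int]],
    h_range: Tuple[float, float],
    s_min: float,
    v_min: float,
) -> int:
    """Count pixels whose HSV values pass the filter (the NRP of one image part).

    pixels are 8-bit (R, G, B) tuples; h_range, s_min and v_min are
    hypothetical bounds expressed on colorsys's [0, 1] scale.
    """
    count = 0
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        if h_range[0] <= h <= h_range[1] and s >= s_min and v >= v_min:
            count += 1
    return count
```

For example, with an assumed amber band `h_range=(0.05, 0.15)`, `s_min=0.5` and `v_min=0.6`, the orange pixels (255, 140, 0) and (255, 160, 20) pass, while white and blue pixels are rejected.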

Because it is hard to set exact values for the three components, some noise pixels are also preserved. An adaptive threshold algorithm is therefore designed to process the waveform and extract the turn light information. Its flowchart is shown in Fig. 5.

In Fig. 5, “Queue.push” means pushing a value into the queue; “Queue.pop” means popping a value from the queue; “Info” is the turn light information, whose value is 1 when the turn light is on and 0 when it is off; “Thd” is the threshold that divides the “NRPs” into two groups and determines the value of “Info”. λ is a numeric constant calculated during initialization.
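The exact update rule of Fig. 5 is not given in the text, so the following is only a hypothetical reconstruction of a queue-based adaptive threshold, not the authors' algorithm. It assumes a fixed-length window of recent NRPs, takes Thd as the midpoint of the window's maximum and minimum, and uses λ as the minimum relative swing (max - min)/max required before the waveform is treated as flashing; the window length and this role of λ are assumptions.

```python
from collections import deque
from typing import Deque


class AdaptiveThreshold:
    """Hypothetical sliding-window threshold over the NRP sequence of one side."""

    def __init__(self, window: int, lam: float) -> None:
        self.queue: Deque[int] = deque()
        self.window = window   # number of recent NRP values kept (assumed)
        self.lam = lam         # λ, used here as a minimum relative swing (assumed)
        self.thd = 0.0         # current threshold Thd

    def update(self, nrp: int) -> int:
        """Push one NRP value and return Info (1 = on, 0 = off)."""
        self.queue.append(nrp)                 # Queue.push
        if len(self.queue) > self.window:
            self.queue.popleft()               # Queue.pop (oldest value)
        hi, lo = max(self.queue), min(self.queue)
        self.thd = (hi + lo) / 2               # Thd splits NRPs into two groups
        if hi == 0 or (hi - lo) < self.lam * hi:
            return 0                           # too little swing: noise only
        return 1 if nrp > self.thd else 0      # Info
```

A `deque` gives constant-time push and pop at both ends. On a synthetic sequence in which a constant noise level of about 20 pixels is mixed with flashes of about 200 pixels, `AdaptiveThreshold(6, 0.1)` returns Info=1 only on the flash frames, while a noise-only sequence stays at 0.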

Fig. 4 NRPs-frame sequence diagram

Fig. 5 Flowchart of adaptive threshold algorithm

5 Implementation

The whole algorithm is implemented in C++ with the OpenCV library [15], and experiments are carried out under several different circumstances.

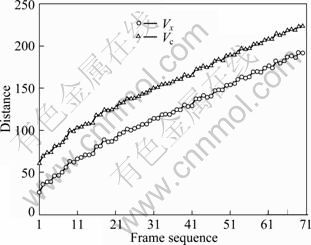

For vehicle rear division, the parameters are set as: 25

Figure 7 shows that the division results satisfy the requirements. Although a deviation appears in the first frame, the results improve in later frames.

Fig. 6 Vc- and Vx-frame sequence diagram

Next, the left and right parts of the image are recognized separately with λ=0.1. Figure 8 shows the changes of NRPs, Thd and Info, and the recognition results based on these changes.

Figure 8(a) shows a front vehicle that enters the view with its turn light flashing and moves farther and farther away from the intelligent vehicle; no noise points remain after filtering. Figure 8(b) shows the same process as Fig. 8(a), but noise points remain after filtering. Figure 8(c) shows a front vehicle coming nearer and nearer, with noise points remaining after filtering. Figures 8(a), (b) and (c) indicate that the recognition results are reliable and that the turn light information of the front vehicle can be recognized in real time.

Fig. 7 Result of approximate division of vehicle rear

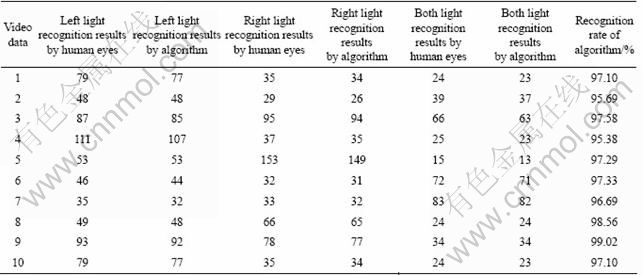

The experimental results are listed in Table 1.

Fig. 8 Results of turn light recognition

Table 1 Recognition rate for adaptive threshold algorithm

6 Conclusions

1) The framework of turn light recognition is built, and the process of data flow is analyzed.

2) The two most important processes of the framework are designed: one distinguishes the left turn light from the right one, and the other recognizes the turn light information.

3) Based on the above analysis, the center of the front vehicle rear is approximated as varying linearly with the vehicle's position in the view image, without requiring the distance between the front vehicle and the intelligent vehicle.

4) The whole algorithm is implemented in C++ with the OpenCV library, and the results show that the method can identify the turn light state in real time and is robust to noise.

5) The system has been applied to an intelligent vehicle, and experiments show that it has good real-time performance, efficiency and robustness.

References

[1] JONES W. Keeping cars from crashing [J]. IEEE Spectrum, 2001, 38(9): 40-45.

[2] XU You-chun, WANG Hong-ben, LI Bin. A summary of worldwide intelligent vehicle [J]. Automotive Engineering, 2001, 23(5): 289-295. (in Chinese)

[3] XU Yuan-ang. Research on license plate location and tail lamp area extraction methods [D]. Xi'an: Xidian University, 2008. (in Chinese)

[4] TONG Jian-jun, ZOU Fu-ming. Speed measurement of vehicle by video image [J]. Journal of Image and Graphics, 2005, 10(2): 192-196. (in Chinese)

[5] WU Bing-fei, CHEN Yen-lin. Real-time image segmentation and rule-based reasoning for vehicle head light detection on a moving vehicle [C]// 7th IASTED International Conference on Signal & Image Processing. Anaheim: ACTA, 2005: 388-393.

[6] EICHNER M L, BRECKON T P. Real-time video analysis for vehicle lights detection using temporal information [C]// 4th European Conference on Visual Media Production (CVMP 2007). London: EIT, 2007: 1-1.

[7] TECNICO I S, de TELECOMUN I, PORTUGAL L. Car recognition based on back lights and rear view features [C]// 10th Workshop on Image Analysis for Multimedia Interactive Services. London: IEEE, 2009: 137-140.

[8] YU Yang-tao. Vehicle turn light recognition based vision [D]. Kunming: Yunnan Normal University, 2006. (in Chinese)

[9] BERTOZZI M, BROGGI A, CELLARIO M. Artificial vision in road vehicles [J]. IEEE-Special issue on Technology and Tools for Visual Perception, 2002, 90(7): 1258-1271.

[10] ZHANG Hong-liang, ZOU Zhong, LI Jie, CHEN Xiang-tao. Flame image recognition of alumina rotary kiln by artificial neural network and support vector machine methods [J]. Journal of Central South University of Technology, 2008, 15(1): 39-43.

[11] CAI Zi-xing, HE Han-gen, CHEN Hong. Theories and methods for mobile robots navigation under unknown environments [M]. Beijing: Science Press, 2009: 25-46. (in Chinese)

[12] ZHANG Gang, MA Zong-min, DENG Li-guo, XU Chang-min. Novel histogram descriptor for global feature extraction and description [J]. Journal of Central South University of Technology, 2010, 17(3): 580-586.

[13] SUN Ze-hang, BEBIS G, MILLER R. Monocular precrash vehicle detection: Features and classifiers [J]. IEEE Transactions on Image Processing, 2006, 15(7): 2019-2034.

[14] GONZALEZ. Digital image processing [M]. 2nd Ed. Beijing: Publishing House of Electronics Industry, 2008: 237-238. (in Chinese)

[15] YU Shi-qi. OpenCv reference manual [EB/OL]. [2010-03-10]. http://www.opencv.org.cn/index.php/Template:Doc

(Edited by YANG Bing)

Foundation item: Projects(90820302, 60805027) supported by the National Natural Science Foundation of China; Project(200805330005) supported by the PhD Programs Foundation of Ministry of Education of China; Project(20010FJ4030) supported by the Academician Foundation of Hunan Province, China

Received date: 2011-01-30; Accepted date: 2011-06-28

Corresponding author: LI Yi, PhD Candidate; Tel: +86-731-82655993; E-mail: liyi1002@csu.edu.cn
