Why do they ask? An exploratory study of crowd discussions about Android application programming interface in stack overflow

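Source journal: Journal of Central South University (English Edition), 2019, Issue 9

Authors: WANG Tao, FAN Qiang, YANG Cheng, YIN Gang, YU Yue, WANG Huai-min

Pages: 2432-2446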

Key words: API documentation; Android; online survey; Stack Overflow

Abstract: Nowadays, more and more Android developers prefer to seek help from Q&A websites like Stack Overflow, despite the rich official documentation. Several studies have examined the limitations of official application programming interface (API) documentation and proposed approaches to improve it. However, few of them have dug into the requirements of third-party developers. In this work, we gain insight into this question from the perspectives of both API developers and API users through a kind of cross-validation. We propose a hybrid approach, which combines manual inspection of artifacts with an online survey of the corresponding developers, to explore the different focuses of these two types of stakeholders. We manually inspect 1000 posts and receive 319 questionnaires in total. Through mutual verification of the inspection and survey processes, we find that users are more concerned with the usage of an API, while the official documentation mainly provides functional descriptions. Furthermore, we identify 9 flaws of the official documentation and summarize 12 aspects (from content to representation) in which the official API documentation can be improved.

Cite this article as: FAN Qiang, WANG Tao, YANG Cheng, YIN Gang, YU Yue, WANG Huai-min. Why do they ask? An exploratory study of crowd discussions about android api in stack overflow [J]. Journal of Central South University, 2019, 26(9): 2432-2446. DOI: https://doi.org/10.1007/s11771-019-4185-5.

J. Cent. South Univ. (2019) 26: 2432-2446

DOI: https://doi.org/10.1007/s11771-019-4185-5

FAN Qiang (范强), WANG Tao (王涛), YANG Cheng (杨程), YIN Gang (尹刚), YU Yue (余跃), WANG Huai-min (王怀民)

National Laboratory for Parallel and Distributed Processing, College of Computer Changsha, Changsha 410073, China

Central South University Press and Springer-Verlag GmbH Germany, part of Springer Nature 2019


1 Introduction

Application programming interface (API) documentation plays an important role in software development and reuse [1] for both API maintainers and API users. Well-written documentation helps developers understand and reuse code effectively [2], and saves them time by letting them focus on the desired interfaces and functions instead of the entire system [3]. Most high-quality open source projects maintain complete and informative official documentation. For example, Android accompanies its API with official documentation covering more than 4.8 thousand classes and 44 thousand methods to support peripheral developers. The API documentation typically conveys detailed specifications, such as class/interface hierarchies and method descriptions, which benefit developers greatly [4, 5].

However, despite its authoritativeness and thoroughness, single-sourced official documentation does not always meet developers’ requirements [6]. The Quora question in Figure 1 shows that the official documentation is not friendly to amateurs. Meanwhile, many developers turn to other knowledge-sharing communities [7], like Stack Overflow and wikis, and seek help from the crowd. By January 2018, over 1 million questions about Android had been posted to Stack Overflow, and about 16% of them were related to the API (found by searching for API and Android in Stack Overflow).

Figure 1 Question about Android documentation in Quora

Why does the official documentation fail to answer developers’ questions? UDDIN et al [8] analyzed how API documentation fails. Compared with official documentation, API users prefer crowd documentation in the consumption communities, which is more dynamic and interactive [6] and has more examples with better coverage [9]. Many studies [6, 10, 11] have worked on filling the gaps between the two types of documentation. Complete and well-written documentation helps developers find what they want and improves development efficiency, and many studies have worked on how to improve documentation [4, 10-13].

Nevertheless, most of these contributions are devoted to a specific aspect (e.g., integrating contextual help into IDEs, or providing commonly used classes) and enhance the documentation only to a limited extent. None of these works investigates third-party developers’ actual requirements on documentation or provides a systematic solution for improving it.

The reason why well-maintained official documentation fails to fulfill developers’ requirements may be rooted in the different perspectives of software manufacturers and API users. The maintainers of official documentation are mainly the API developers, who are quite familiar with the details of the API and provide information mainly from their own view, while API users may need more information about usage and so on.

In this work, we study this question intensively with a multidimensional cross-validation from the perspectives of both API developers and API users. We explore the crowd discussions about the Android API in Stack Overflow, compare them with the official documentation, and identify which questions have troubled third-party developers and why they are confused even though official documentation exists. In addition, we conduct an extensive online survey of the developers involved in the discussions to understand their troubles, which mutually verifies our findings. Based on this comprehensive analysis, we provide a guideline for improving documentation from content to representation.

In summary, the key contributions of this paper include:

1) We propose a hybrid approach to investigate the actual requirements on API documentation from third-party developers’ perspective. We summarize 6 types of questions about the Android API, and the survey results confirm our findings.

2) We summarize 9 problems in the API documentation from the inspection of Stack Overflow posts and the online survey. The results show that the lack of examples is the most important factor causing questions for developers. Moreover, we identify 5 other main factors of the documentation that confuse developers.

3) Based on the investigation, we provide a guideline on how to improve the documentation for third-party developers, including 5 kinds of content supplements and 7 types of content representation.

The rest of this paper is organized as follows. In Section 2, we present the background and research questions of our study. In Section 3, we present the key methods of our study, including manual inspection and online survey. The results and discussion can be found in Section 4. In Section 5, we discuss threats to the validity of our work. Related work is presented in Section 6. Finally, conclusions are drawn in Section 7.

2 Background and research questions

2.1 API documentation

An API is a set of subroutine definitions, protocols, and tools for building application software. It abstracts the underlying implementation and exposes the objects or actions that the API user needs. However, most APIs are abstract and hard for API users to understand. Most of the time, API users need more information to figure out how to properly use the API to implement the desired function. API documentation is the main channel for transmitting information from API developers to API users.

API documentation is a technical content deliverable containing details about functions, classes, return types, arguments, etc. Most of the time, official API documentation is maintained by the core team or enthusiastic fans of the project, and good documentation is well edited and well structured. Under ideal conditions, good API documentation should provide concise and basic information about the API, conveying to API users how to use and integrate with the API effectively. However, the different perspectives of API developers and users make this a challenge. For example, API developers and API users use different keywords [14] to describe an API, and different technical backgrounds lead them to require [15] different information about the API. On the one hand, these differences in keywords and technical background make the API hard for API users to use, which increases its cost. On the other hand, they also make it hard for API developers to figure out API users’ requirements.

Facing the weaknesses of API documentation, API users often turn to Q&A websites like Stack Overflow to seek help from other developers. They post the question or problem they have met on the Q&A website, and other developers who have solved the problem help them by answering the post. Over time, these websites accumulate a tremendous amount of discussion about APIs, which can be seen as a kind of crowd documentation [6, 10, 11]. Compared with official API documentation, crowd documentation is more dynamic and interactive. API users can search historical discussions for a solution, or post a new question to obtain one from other developers. The advantages of crowd documentation are that API users can find new solutions in other developers’ experience, and that its question-oriented form lets API users locate information more quickly.

Its flexibility and question-oriented form lead more and more API users to consult crowd documentation for solutions, but there are still some limitations. For instance, the quality of answers from crowd developers is uneven, and many answers are not well edited or proofread, so it is hard to guarantee the correctness of information provided by the crowd [16]. The process of filtering out the right answer is time consuming and frustrating. Besides, crowd developers need time to understand the question and to phrase an answer [17, 18]. Some questions may not get attention, so few developers answer or respond to them [19]. Because of such factors, API users may not receive help in time. Well-edited API documentation therefore plays an irreplaceable role in providing reliable information and swift inquiry.

To help API developers better carry out documentation work, many researchers have worked on empirical studies and automatic techniques to improve API documentation. For example, some studies examine the influence of different types of information on developers, including constraints [20-22], software structure [23-27], etc. Other researchers study automatic summarization techniques to help generate documents automatically, including generating summaries for code snippets [28], methods/functions [29], classes [30] and multiple classes [31].

2.2 Research question

In Section 2.1, we introduced the advantages and weaknesses of official documentation and crowd documentation. We suppose that one of the main factors limiting official documentation is the difference in perspective between API documentation maintainers and users. Developers do not know what users need, and the information they provide cannot always meet the needs of users. Users have to seek help from other sources to answer their questions.

Much research has worked on improving documentation, such as studying the influence of different information [21, 32, 33] and summarizing a taxonomy of user activities about APIs [34]. However, little research studies the difference in requirements between API developers and users. In this paper, we want to figure out this difference and explore the users’ requirements for API documentation, so we ask:

RQ1: What topics do users ask about API in Stack Overflow?

Furthermore, we want to figure out which questions have troubled third-party developers and why they are confused despite well-maintained documentation, so we ask:

RQ2: What are the weaknesses of API documentation? What factors cause questions?

Finally, we want to give a guideline based on our analysis. Through this guideline, API developers and automatic documentation researchers will know what information API users need most and in what form to organize that information.

RQ3: How can the documentation be improved to meet the requirements of API users?

3 Approach

3.1 Overview

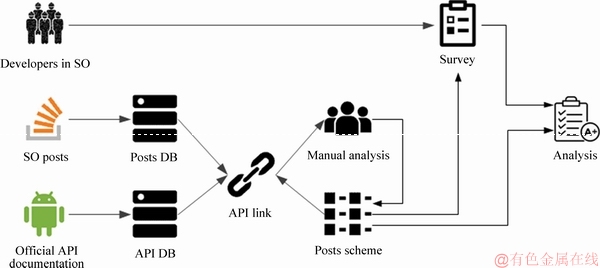

In this paper, we propose a hybrid approach to explore the requirements of third-party developers regarding API documentation. The approach includes manual inspection and an online survey; an overview is shown in Figure 2.

First, we link the Android API to Android-related posts in order to filter out the posts that discuss the Android API. The detailed process is introduced in Section 3.4. Second, we use manual inspection to categorize the topics and causes of questions based on the posts selected in the API linking process. In addition, we identify the information provided in the official documentation, which is used to compare the different perspectives of API developers and users. This process is described in Section 3.2. Then, we use the results of the manual inspection to design a survey and deliver it to users of Stack Overflow, as presented in Section 3.3. Finally, we combine the results of the manual inspection and the online survey to reach the conclusions in Section 4.

3.2 Manual inspection

Google provides well-edited API documentation to help Android developers. However, developers prefer to seek help in open source communities, e.g., Stack Overflow [6]. Millions of posts have been discussed by third-party Android developers in Stack Overflow alone. Inspired by this, we manually inspect the posts about the Android API to observe the motivations of developers who ask questions in Stack Overflow. In addition, we inspect the official Android API documentation to explore API requirements from the API maintainers’ perspective, which helps us understand the gap between API maintainers and third-party developers.

1) Manual inspection of Stack Overflow posts. We perform an iterative process, as in previous research [34], to identify and categorize why third-party Android developers ask questions in Stack Overflow. In this process, we manually inspect the Android-related posts and try to build a taxonomy scheme of developers’ information requirements. This iterative process consists of the following steps:

Preparation phase. We randomly select 100 posts associated with the Android API and inspect the questions and answers of the posts. We extract the main issue discussed in the questions and answers, which helps us determine how to classify them. Then we write a summary message that briefly describes the issue for each selected post.

Figure 2 Overview process of hybrid approach

Execution phase. After the preparation phase, we analyze the summary messages of the posts and extract a taxonomy of questions and answers. Through a rigorous analysis of the existing literature [6, 8, 35] and our own working and analysis experience, we identify 6 types of questions and 10 causes of questions, which stabilize after iterative labeling.

Iterative phase. Referring to the results of the preceding phase, 10 master’s students repeat the previous process on newly selected questions to examine the stability of the taxonomy definition. Each selected post is assigned to two students, who identify the category of the post based on the taxonomy of questions and answers (as introduced in the execution phase). If a new category is recognized, the students reexamine the previously classified posts to determine whether their categorization changes. Posts that receive the same category from both students are stored directly, and posts that receive different categories are discussed until an agreement is reached. Finally, we use our stabilized taxonomy to classify 1000 Android-related questions.

2) Manual inspection of Android documentation. Google provides structured API documentation as a web service. For each API method, it provides a brief functional description as well as an explanation of the input parameters and return value. Sometimes the documentation also gives notes for API methods and links to other API methods with related or similar functions. Figure 3 shows an example API method to present the details of the documentation.
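The double-labeling step of the iterative phase can be illustrated with a small Python sketch. It only separates posts on which the two students agree from posts that need discussion; the function name `reconcile`, the post ids and the labels are hypothetical, and labels are modeled as sets because a post may belong to several categories.

```python
def reconcile(labels_a, labels_b):
    """Split posts into agreed labels and post ids that need discussion.

    labels_a, labels_b: dict mapping post id -> set of category indices,
    one dict per student (hypothetical data layout).
    """
    agreed = {}
    disputed = []
    for post_id in sorted(labels_a.keys() & labels_b.keys()):
        if labels_a[post_id] == labels_b[post_id]:
            agreed[post_id] = labels_a[post_id]   # same category from both students
        else:
            disputed.append(post_id)              # to be discussed until agreement
    return agreed, disputed


# Hypothetical labels from two students for four posts.
student_1 = {101: {"Q2"}, 102: {"Q4"}, 103: {"Q1", "Q3"}, 104: {"Q6"}}
student_2 = {101: {"Q2"}, 102: {"Q2"}, 103: {"Q1", "Q3"}, 104: {"Q6"}}

agreed, disputed = reconcile(student_1, student_2)
print("stored directly:", sorted(agreed))
print("needs discussion:", disputed)
```

In this toy data only post 102 would go to discussion; the other three would be stored directly.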

Functional description. A brief description of basic function the API method achieves. API users can gain a preliminary understanding of the API method through this information.

Note. The warning information that API users need to pay attention to when using the API method. It helps API users use the API correctly and avoid unnecessary errors.

I/O. Detailed information about the input and output of the API method.

Related/similar API. Links to other APIs that are related or similar to the API. Android provides a huge number of APIs to API users, which may confuse them. This information is helpful for them to recognize the right API.

In this process, we use an automatic method to recognize the information. We download all the web pages of Android API documentation and use Beautiful Soup [36] to extract the information from web pages by its web HTML tag. For example, the tag

3.3 Online survey

To figure out which questions trouble third-party developers despite the well-edited official documentation, we also publish an online survey to capture the voices and opinions of third-party developers. Below, we describe how we design the survey and gather the feedback.

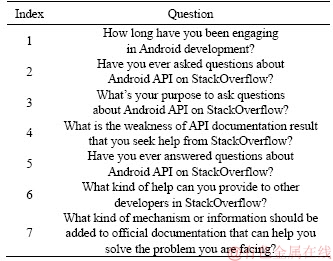

1) Survey design. To better collect responses from third-party developers, we design 7 questions around our 3 research questions, as shown in Table 1. The survey includes multiple-choice questions and open-ended questions. The options of the multiple-choice questions come from the manual inspection process. For each topic with fixed answers, we design a screening question to filter out careless respondents (those who answer the questions casually), i.e., Questions 2 and 5 in Table 1. If a respondent answers “no” to such a question, his or her answers to the following questions on that topic are rejected. For example, before Question 3 collects opinions about why users ask questions in Stack Overflow, Question 2 asks whether the respondent has ever asked a question. In addition, to further elicit opinions, every question with fixed answers includes an optional “other” response. In total, we design 2 questions with fixed answers for RQ1 (Questions 3 and 6), 1 question with fixed answers for RQ2 (Question 4), 1 open-ended question for RQ3 (Question 7), and 2 screening questions (Questions 2 and 5). The detailed design of the questions is presented in Section 4.
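The rejection rule tied to Questions 2 and 5 can be sketched in Python as follows. This is only an illustration: the mapping of each gate question to the questions it guards (Question 2 guarding Questions 3 and 4, Question 5 guarding Question 6) is an assumption, and the response format and option labels are hypothetical.

```python
# Assumed gating: each gate question guards the fixed-answer questions
# that follow it on the same topic (this mapping is an assumption).
GATES = {"Q2": ["Q3", "Q4"], "Q5": ["Q6"]}


def filter_response(response):
    """Return a copy of one response with gated answers removed.

    response: dict mapping question id -> answer (hypothetical format).
    Answers guarded by a gate question are kept only if the gate is "yes".
    """
    kept = dict(response)
    for gate, guarded in GATES.items():
        if response.get(gate) != "yes":
            for question in guarded:
                kept.pop(question, None)   # reject answers on this topic
    return kept


# Two hypothetical responses; option labels such as "W3" or "A1" are made up.
responses = [
    {"Q2": "yes", "Q3": ["Q2", "Q4"], "Q4": ["W3"], "Q5": "no", "Q6": ["A1"]},
    {"Q2": "no", "Q3": ["Q1"], "Q4": ["W2"], "Q5": "yes", "Q6": ["A2"]},
]
cleaned = [filter_response(r) for r in responses]
for row in cleaned:
    print(row)
```

The first respondent keeps the answers about asking questions but loses Question 6; the second respondent is the reverse case.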

Figure 3 An example of official Android API documentation

Table 1 Survey design

2) Target participants. We release the questionnaire on Survey Monkey [37], one of the best-known web services for online surveys, which has been used in many studies [38, 39]. Survey Monkey provides many ways to collect survey responses, e.g., web link, email, and social media. Here, we send the survey to participants by email. We select two groups of participants as recipients: 1) users who have asked questions about Android API usage; and 2) users who have answered questions about Android API usage. The opinions of the two groups of developers help us gain insight into our research questions. We include active developers in our survey because they have more experience in the community and are more likely to give useful responses.

3) Respondents. Within a period of 7 days, we received 319 responses in total. We also received 230 comments across 4 questions, even though we did not require participants to fill them in. Additionally, many participants sent e-mails to us and showed their interest in our study.

3.4 Data collection and preprocessing

1) Data collection. Stack Overflow regularly publishes a data dump through Stack Exchange [40]. The data dump includes all posts in XML format. Moreover, MSR provides a data dump as an SQL script for its data challenge [41], which contains developer information such as e-mails. In this study, we use the December 2016 Stack Overflow data dump [40] in the manual inspection process and the August 2012 MSR data dump [41] to obtain developers’ e-mails for the survey. We select all questions with Android-related tags from Stack Overflow. For each question, we extract the same 6 pieces of information, i.e., the title, the description of the question, the list of its answers, the number of views, and the dates on which the question and the accepted answer were posted. In total, we collect 886920 questions and 1264807 answers about Android. Besides, we extract the information of the developers associated with Android posts. The developer information in the Stack Overflow data dump does not contain e-mails, which are important for our online survey. We therefore use the developers’ login names to join the user information in the Stack Overflow data dump with that in the MSR data dump, and obtain the e-mails from the MSR data dump.
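A minimal Python sketch of the two steps described above, selecting questions with Android-related tags and joining users by login, might look as follows. It assumes the row-per-post XML layout of the public Stack Overflow dump (attributes such as `PostTypeId`, `Tags` and `ViewCount`); the rule that a tag is Android-related if it starts with "android", the sample data, and the shapes of the two user tables are assumptions made for illustration.

```python
import re
import xml.etree.ElementTree as ET

# Tiny hypothetical excerpt in the style of the dump's Posts.xml.
POSTS_XML = """<posts>
  <row Id="1" PostTypeId="1" Tags="&lt;android&gt;&lt;java&gt;"
       Title="How to use AsyncTask?" ViewCount="120" OwnerUserId="10" />
  <row Id="2" PostTypeId="2" ParentId="1" OwnerUserId="11" />
  <row Id="3" PostTypeId="1" Tags="&lt;python&gt;"
       Title="List comprehension" ViewCount="50" OwnerUserId="12" />
</posts>"""


def android_questions(posts_xml):
    """Return questions (PostTypeId 1) that carry at least one android* tag."""
    selected = []
    for row in ET.fromstring(posts_xml).iter("row"):
        if row.get("PostTypeId") != "1":
            continue  # skip answers
        tags = re.findall(r"<([^>]+)>", row.get("Tags", ""))
        # Assumption: "Android-related" means the tag starts with "android".
        if any(tag.startswith("android") for tag in tags):
            selected.append({
                "id": int(row.get("Id")),
                "title": row.get("Title"),
                "views": int(row.get("ViewCount", "0")),
                "owner": row.get("OwnerUserId"),
            })
    return selected


def join_emails(so_users, msr_users):
    """Join Stack Overflow users to MSR e-mails through the shared login.

    so_users: user id -> login; msr_users: login -> e-mail (hypothetical shapes).
    """
    return {uid: msr_users[login]
            for uid, login in so_users.items() if login in msr_users}


questions = android_questions(POSTS_XML)
emails = join_emails({"10": "alice", "12": "bob"},
                     {"alice": "alice@example.org"})
print(questions)
print(emails)
```

Users without a matching login in the second table are simply dropped, which mirrors the fact that only developers present in both dumps can be contacted.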

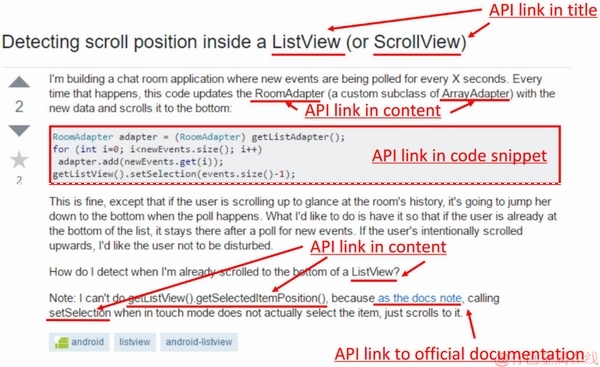

2) API link. To extract the posts that discuss the Android API, we use a process adopted from previous research [42-44]. In our case, a link is a connection between a Stack Overflow question and an Android class (or method). To collect more discussions about the Android API, we extract several types of links, as shown in Figure 4.

Word link. A match of a class name or method name occurring in the text of the post, i.e., in the title, body or answers of the question, after removing the content inside `<code>` tags and all HTML tags.

Code markup link. A match of a class name or method name occurring in the content inside `<code>` HTML tags.

Figure 4 An example of a post with multiple links

Href markup link. A match of a class name or method name occurring in the content inside `<a>` HTML tags. Most of the time, the link points to the official Android API documentation.

For each type of link, we build a filter to detect the API links contained in the text. Because the names of Android APIs are in some cases common words, we need to detect links carefully to reduce the number of false positives. The Android API uses camel case for class names and method names, which can be used to determine whether a name is a single word. Different types of links have different chances of being false positives. For example, plain text contains the most interfering information, so a code markup link is less likely to be a false positive than a word link. Therefore, different strategies are adopted for these links. A single-word class (or method) name is more likely to appear as a common word. Single-word classes and methods are therefore accepted directly in code markup links and href markup links, whereas for word links we use negative lookahead to require more information, e.g., android.app.Activity and AbsListView.draw. For href markup links, a regex pattern is used to determine whether the hyperlink points to the official documentation. Considering that users often post complete code blocks in their questions, we only filter code markup links embedded in the running text of the body and ignore the code snippets. With this method, we finally obtain 54927 Stack Overflow questions that link to at least one Android API class or method.
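The three link types and the different strictness applied to each can be sketched with Python's `re` module. This is a simplified illustration, not the filters used in the study: the two-entry API index, the documentation URL pattern, the tag names `<code>`, `<a>` and `<pre>`, and the rule that a single-word name counts as a word link only when its fully qualified name appears (used here in place of the lookahead-based pattern) are all assumptions.

```python
import re

# Hypothetical index: simple class name -> fully qualified name.
API_NAMES = {"AbsListView": "android.widget.AbsListView",
             "Activity": "android.app.Activity"}
# Assumed shape of official documentation URLs.
DOC_URL = re.compile(r"https?://developer\.android\.com/reference/([\w/]+)")


def is_multi_word(name):
    """True if the camel-case name consists of more than one word."""
    return len(re.findall(r"[A-Z]?[a-z0-9]+", name)) > 1


def find_links(body_html):
    """Return a set of (link type, simple API name) pairs found in a post body."""
    links = set()
    # Complete code blocks are ignored; only inline markup is inspected.
    html = re.sub(r"<pre>.*?</pre>", " ", body_html, flags=re.S)

    # Href markup link: the hyperlink must point to the official documentation.
    for match in re.finditer(r'<a\s[^>]*href="([^"]+)"', html):
        doc = DOC_URL.match(match.group(1))
        if doc:
            simple = doc.group(1).rstrip("/").split("/")[-1]
            if simple in API_NAMES:
                links.add(("href", simple))

    # Code markup link: any known name inside inline <code> tags.
    for code in re.findall(r"<code>(.*?)</code>", html, flags=re.S):
        for token in re.findall(r"[A-Za-z_]\w*", code):
            if token in API_NAMES:
                links.add(("code", token))

    # Word link: plain text after dropping <code> content and all tags.
    text = re.sub(r"<code>.*?</code>", " ", html, flags=re.S)
    text = re.sub(r"<[^>]+>", " ", text)
    for simple, qualified in API_NAMES.items():
        if is_multi_word(simple):
            if re.search(r"\b" + re.escape(simple) + r"\b", text):
                links.add(("word", simple))
        elif qualified in text:   # single-word names need the qualified form
            links.add(("word", simple))
    return links


post = ('<p>My Activity crashes when AbsListView scrolls. See '
        '<a href="https://developer.android.com/reference/android/app/Activity.html">docs</a> '
        'and <code>Activity.onCreate</code>.</p>'
        '<pre><code>AbsListView v = null;</code></pre>')
links = find_links(post)
print(sorted(links))
```

In the sample post, the bare word "Activity" in running text is not counted as a word link, while the same name inside inline code markup and inside a documentation hyperlink is, which is the asymmetry the paragraph above describes.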

4 Results

4.1 RQ1: What topics do API users mainly discussed in Stack Overflows?

We use a hybrid approach, including manual inspection and online survey, to explore the user’s requirements about API documentation, including manual inspection and online survey, as described in Section 3.

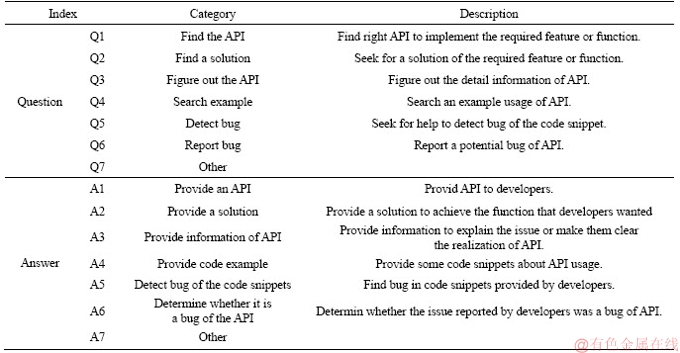

In manual inspection process, 10 master students handle total 1000 posts related to Android API from Stack Overflow, and each post is assigned to 2 students. Finally, they identify 6 main categories of questions, and 6 corresponding answers. The complete taxonomy schema of questions and answers is shown in Table 2, and for each category, we provide a short description to interpret. Also, we would like to point out that it is a common phenomenon that a single question and answer might belong to multiple categories.

In online survey process (as shown in Table 1), we use our taxonomy schema of manual inspection as option of question, and deliver the survey to third-part developers in Stack Overflow, who are active in questions about Android.

Table 2 Taxonomy scheme of posts in Stack Overflow

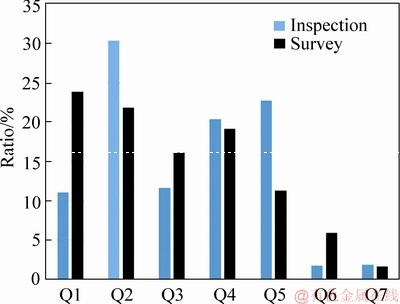

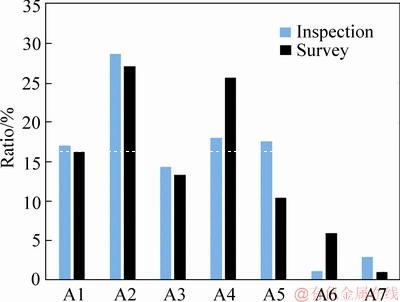

We normalize the results of the manual inspection and the online survey to minimize the bias between the two measurements. For each category i in Table 2, we calculate the ratio of posts as Eq. (1) and the ratio of opinions from users as Eq. (2). In Eq. (1), $C_i$ denotes category i, $post_j \in C_i$ denotes a post identified as category i, and $view_j$ denotes the pageviews of $post_j$. In Eq. (2), $vote_i$ denotes the number of users who vote for category i. The distributions of questions and answers are shown in Figures 5 and 6, where the x-axis is the index of the categories based on Table 2 and the y-axis is the ratio of each category.

(1)

(2)
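One reading consistent with the symbol definitions for Eqs. (1) and (2) is that the first ratio is the share of pageviews that falls on posts of category i, and the second is the share of votes that category i receives. The Python sketch below computes both under that assumption; the exact form of the original equations is not confirmed here, and the function names and sample numbers are hypothetical.

```python
def view_weighted_ratios(posts):
    """Assumed form of Eq. (1): pageviews of posts in a category / all pageviews.

    posts: list of (set of category indices, pageviews). A post with several
    categories contributes its views to each of them.
    """
    totals = {}
    for categories, views in posts:
        for category in categories:
            totals[category] = totals.get(category, 0) + views
    grand_total = sum(totals.values())
    return {category: views / grand_total for category, views in totals.items()}


def vote_ratios(votes):
    """Assumed form of Eq. (2): votes for a category / all votes."""
    total = sum(votes.values())
    return {category: count / total for category, count in votes.items()}


# Hypothetical inspection results and survey votes.
posts = [({"Q2"}, 300), ({"Q4"}, 100), ({"Q2", "Q4"}, 100)]
votes = {"Q2": 30, "Q4": 10}

inspection = view_weighted_ratios(posts)
survey = vote_ratios(votes)
print(inspection)
print(survey)
```

Because both results sum to one over the categories, the two distributions can be plotted on the same axis even though one is measured in pageviews and the other in votes.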

From Figures 5 and 6, we can see similar distributions for the manual inspection and the online survey, which provides a double verification of our conclusions. Figure 5 shows that finding a solution (Ind Q2) and searching for examples (Ind Q4) reach a high ratio (nearly 20%) in both the manual inspection and the online survey. The ratios of Ind Q2 and Q4 are higher than that of Q3 (figuring out the API), which suggests that API users are more concerned about how to use the API. Finding the API (Ind Q1) shows a higher ratio in the survey than in the inspection, perhaps because users can find answers in other questions.

Figure 5 Results of both survey and inspection about asking questions in Stack Overflow

Figure 6 Results of both survey and inspection about answering questions in Stack Overflow

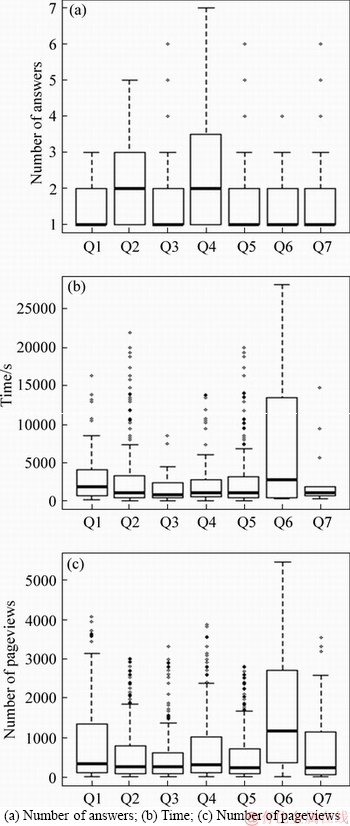

In addition, the data analysis results of the manual inspection are shown in Figure 7, where the x-axis is the index of the categories based on Table 2. Figure 7(a) shows the number of answers that each category of questions received, where the y-axis is the number of answers. Figure 7(b) shows the time taken to solve the questions, where the y-axis is time. Figure 7(c) shows the pageviews of the questions, where the y-axis is the number of views that the questions acquired.

Finding a solution (Q2) and searching for examples (Q4) need more alternative answers. In Figure 7(a), Ind Q2 and Q4 receive more answers than the other categories of questions. More answers may mean that there are no confirmed answers to these questions, or that more users in Stack Overflow can provide useful information. API users receive many alternative answers and can select the best one to solve their problem.

Reporting a bug (Q6) needs more time. In Figure 7(b), the time spent on Ind Q6 is longer than on the other categories of questions. For Ind Q6, determining whether a problem is a bug in the API is more difficult than for other categories and requires more development experience, which means that fewer users can answer the questions. Moreover, answering this type of question may take more effort to reach a judgment.

4.2 RQ2: Why do API users’ questions arise?

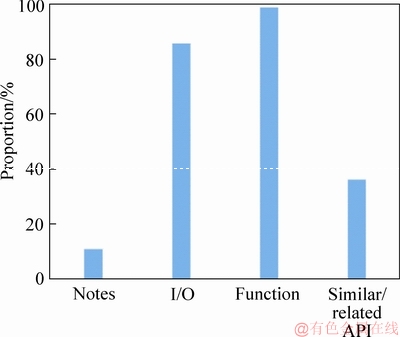

Through manual inspection of Android-related posts in Stack Overflow, we summarize a taxonomy scheme of the factors that lead developers to seek help from Stack Overflow even though well-edited official documentation is available. The scheme is used for data analysis and as the fixed choices of the survey, as in Section 4.1. We extract 9 main categories of factors; Table 3 shows the complete taxonomy. In particular, index W1 is used only for survey purposes. For each factor, we provide a short description.

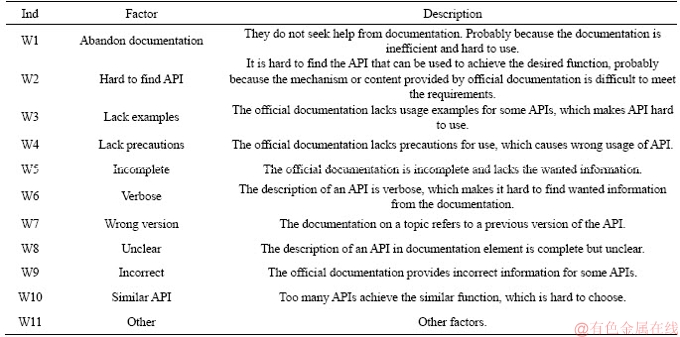

Figure 8 shows the distributions of API documentation problems from the result of manual inspection and online survey based on Table 3. The results of manual inspection and online survey show a similar distribution. Because index W1 is only for survey purpose, there is no data in the result of manual inspection.

Figure 7 Data analysis of manual inspection: (a) Number of answers received by each category of questions; (b) Time to solve the questions; (c) Pageviews of the questions

Nearly 10% of API users never use the documentation (W1). They believe that searching for answers or asking questions in Stack Overflow is more efficient than searching for answers in the documentation. In addition, they find it easier to locate what they want in Stack Overflow.

Lack of examples (W3) is the most important factor causing questions for third-party developers. Nearly 50% of the questions in the manual inspection and 50% of the third-party developers in the survey indicate that the lack of examples causes problems. Most of the time, the official documentation provides a very detailed explanation of what the API is used for and what each parameter means. This information is useful for third-party developers searching for an API, but the lack of examples forces them to search for extra information to figure out how to use it. Furthermore, the lack of examples results in many wrong usages of the API, which make programs crash or run with errors.

Table 3 Taxonomy scheme about shortcomings of API documentation

Figure 8 Results of both survey and inspection about shortcomings of API documentation

Searching for an API (W2) is one of the main needs of API users. Nearly 20% of the questions in the manual inspection and 30% of the third-party developers in the survey indicate that searching for an API is important. In the earlier results of Section 4, questions about finding an API also receive more pageviews than other types of questions, which supports this conclusion.

Lack of precautions (W4) leads to incorrect use of the API. Over 30% of the questions and nearly 20% of the third-party developers indicate that precautions are important. Precautions can prevent incorrect usage; without them, API users spend much time fixing incorrect usage in their programs.

The content of the official documentation is far from complete (W5). Over 30% of the questions in the manual inspection and over 30% of the third-party developers in the survey point to incompleteness of the documentation. Developers cannot always find what they want in the documentation, so they have to seek help from other developers to find the information and to understand the API better.

Locating the desired information in the documentation is hard (W6), which frustrates third-party developers. Over 30% of the questions and nearly 30% of the API users point to this problem. More and more information is added to the documentation with each version iteration, which increases the cost of locating information and reduces development efficiency. A good search mechanism and a recommendation system would help developers use the documentation.

Sometimes the wording of the documentation is hard to understand (W8). Nearly 20% of the questions and 30% of the third-party developers point to this problem. The official documentation is written by the manufacturers in accurate and concise language, which may be incomprehensible to third-party developers.
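The percentages reported above are shares of multi-label records: one post or one questionnaire can point to several problems at once, so the shares need not sum to 100%. The following minimal sketch shows how such shares could be tallied for the two data sources. The function name `category_shares` and the toy records are assumptions made for illustration; they are not the study's data or tooling.

```python
from collections import Counter


def category_shares(records):
    """Return the fraction of records tagged with each category label.

    Each record is a set of labels (e.g. {"W3", "W5"}). A record may carry
    several labels, so the returned shares need not sum to 1.
    """
    counts = Counter(label for labels in records for label in set(labels))
    total = len(records)
    return {label: counts[label] / total for label in sorted(counts)}


# Toy records invented for illustration only (NOT the study's data).
inspected_posts = [{"W3"}, {"W3", "W5"}, {"W4"}, {"W2", "W6"}]
survey_answers = [{"W1"}, {"W3", "W8"}, {"W3"}, {"W2", "W5"}]

inspection = category_shares(inspected_posts)
survey = category_shares(survey_answers)

# Print the two distributions side by side; a label missing from one
# source (such as the survey-only W1) is shown as 0.0 for that source.
for label in sorted(set(inspection) | set(survey)):
    print(label, inspection.get(label, 0.0), survey.get(label, 0.0))
```

Keeping the two sources in separate dictionaries makes it easy to see labels that exist in only one of them, which mirrors the remark that W1 has no manual-inspection data.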

4.3 RQ3: How to improve official API documentation

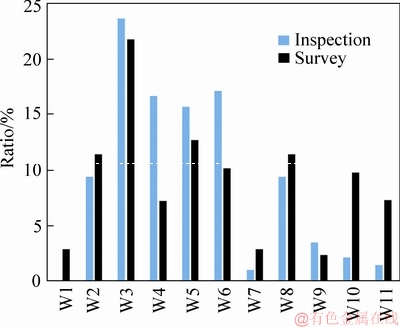

To find out what API developers mainly care about, we summarize a taxonomy scheme of documentation by manual inspection, as introduced in Section 3.2. The results are shown in Figure 9, which gives the proportion of APIs whose official documentation contains each kind of information (notes, I/O, functional description, and similar/related API).

Figure 9 Distribution of API documentation

Figure 9 shows that a basic natural-language functional description is the main information in the API documentation. It helps API users understand what the API does. However, there is a gap between what the API is and how to use it. API users still need to search for more information or examples to reuse the API in their applications, even when the documentation provides notes, I/O, and similar/related APIs. This information helps reduce problems arising during development, but it is not enough. The lack of examples costs API users much time searching for a good practice for the API.

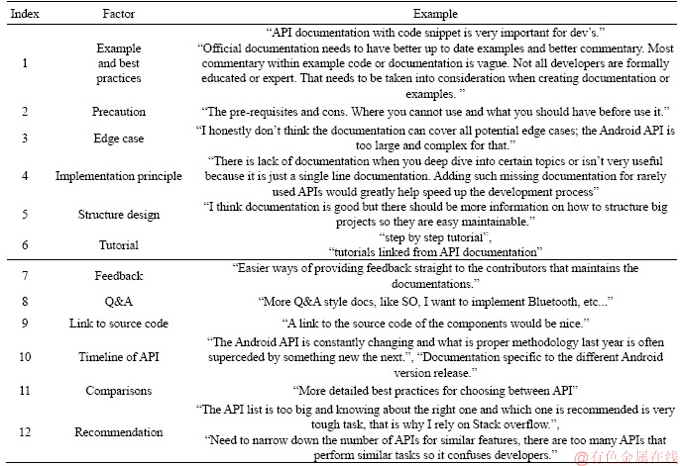

In our survey, Question 7 in Table 1 aims to collect ways to improve the documentation. We received 172 comments in total about how to improve the official documentation, and developers showed great interest in this question. A large share of them describe problems with the documentation in detail and propose the improvements they want. In this section, we present the survey results in terms of content and representation. Table 4 shows example user feedback from our survey for each suggestion.

Table 4 Example of users’ feedback for improving documentation

1) Content

Examples and best practices. Examples are mentioned most often in the comments. To some extent, providing more examples can resolve most questions about an API. Moreover, it is better to identify the best practice among the many possible implementations, which saves developers the time of evaluating them.

Precautions. Precautions are very useful for preventing incorrect usage of an API, and they also help in fixing bugs. However, most third-party developers pay attention to precautions only when a bug appears in their program, so precautions need to be delivered through a well-performing recommendation system. In addition, prerequisites and drawbacks are also important: they tell developers where the API cannot be used and what must be in place before using it.

Edge cases. Some edge cases are not covered by the documentation, although they may be important to particular developers. Covering them may cost much time, so it would be better to give the crowd the authority to document edge cases.

Implementation principles. Most API documentation does not explain why the API works. When an issue stems from the principles behind the API, the documentation cannot resolve it, and fixing it costs much time. In addition, the documentation cannot satisfy API users who want to know more about the API. Providing implementation principles would therefore make the API easier to maintain.

2) Representation

Tutorial. Most documentation is not friendly to beginners, who need to spend much time getting started. The documentation should provide step-by-step tutorials that are easy to follow and help beginners get started faster.

Feedback. At present, the documentation is not interactive. Developers have to log in to another platform (e.g., Stack Overflow) to discuss its contents. Adding a feedback function would collect more information from the crowd, which helps to share knowledge.

Q&A. The statements in the API documentation contain much useful information. However, they do not say which questions they answer, which makes it hard for developers to locate what they want; developers have to mine the documentation for it. Presenting information in Q&A form links statements to questions, which helps developers search for answers.

Link to source code. For the API users who want to look into API deeply, providing a link to the source code of the components would be nice.

Timeline of API. APIs commonly change across version iterations, and an API may stop working after a system upgrade. A timeline of API changes across versions would be very helpful for fixing programs.

Comparisons. In some cases, API users need to compare different implementations or APIs to pick the better one. Many such comparisons have already been made, and their results can be reused. Providing a comparison mechanism can save much time.

Recommendations. More and more information is added to the documentation with each version iteration, which increases the cost of locating information and reduces development efficiency. Third-party developers prefer documentation that surfaces the information they want rather than making them mine it from a list of statements. A well-performing recommendation system is therefore necessary.
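To make the "Timeline of API" suggestion above more concrete, the sketch below models one timeline entry as a small record that answers the question "what is the status of this API at a given platform version?". Everything in it is hypothetical: the class `ApiTimeline`, its fields, the API name and the version numbers are assumptions for illustration and do not describe any real Android API or documentation format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ApiTimeline:
    """Hypothetical timeline record for a single API element."""

    name: str
    added_in: int                        # first version that offers the API
    deprecated_in: Optional[int] = None  # version that deprecates it, if any
    removed_in: Optional[int] = None     # version that removes it, if any

    def status_at(self, api_level: int) -> str:
        """Return the status of the API at the given version number."""
        if api_level < self.added_in:
            return "not yet available"
        if self.removed_in is not None and api_level >= self.removed_in:
            return "removed"
        if self.deprecated_in is not None and api_level >= self.deprecated_in:
            return "deprecated"
        return "available"


# Hypothetical entry; the name and version numbers are invented.
entry = ApiTimeline(
    name="ExampleWidget.render",
    added_in=11,
    deprecated_in=23,
    removed_in=28,
)

for level in (10, 11, 23, 28):
    print(level, entry.status_at(level))
```

A lookup of this kind would let a developer who upgrades the target system check at a glance whether a call that used to work is now deprecated or gone.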

5 Threats to validity

This section discusses the threats to validity of our study following the guidelines for case study research [45].

Construct validity threats. In this paper, the threats to construct validity come mainly from the subjective judgment involved in identifying and categorizing Android-related posts during manual inspection. Many studies [44, 46, 47] have conducted manual inspection of API discussions. We use a well-designed workflow for manual inspection and follow its guidelines carefully to avoid mistakes.

External validity threats. In this paper, we discuss only the API documentation of Android. Although Android is one of the most popular projects and many API studies choose it as the target project [43, 44], further analysis is needed before claiming that our findings generalize to other documentation. Moreover, the same question can appear repeatedly in the data collected for our manual inspection. Other developer populations and programming forums besides Stack Overflow will be addressed in future work.

Internal validity threats. The main threat to internal validity is that our study relies heavily on the guideline, and developers may misunderstand the choices in our survey. We extracted the choices from posts based on the existing literature and our experience, and for multiple-choice questions we supplied an additional “other” field to uncover responses we had not considered and to collect developers' feedback. We made our best effort to minimize the threats to internal validity.

6 Related works

Several studies have worked on improving API documentation. STYLOS et al [13] presented Jadeite, which shows developers the most commonly used classes and identifies the common ways to construct an instance of a given class.

DEKEL et al [4] introduced an Eclipse plug-in, eMoose, to decorate method invocations whose targets have associated directives. Moreover, they highlighted the tagged directives in the JavaDoc hover to lead readers to investigate further.

Other works have focused on understanding the difficulties encountered with unfamiliar APIs. ROBILLARD [48] and ROBILLARD and DELINE [49] identified the obstacles encountered when learning a new API. They believed these obstacles are essentially associated with the API documentation. DUALA-EKOKO et al [35] identified 20 types of questions asked when working with unfamiliar APIs. Surveys and interviews were applied in these three studies.

Code snippets are also important for developers. Several researchers have focused on adding recommended code examples to API documentation. For example, KIM et al [50] mined code example summaries from the web and proposed a recommendation system that returns API documentation together with the mined summaries. In a similar effort, CHEN et al [6] connected official documentation with crowdsourced API documentation and integrated crowdsourced frequently asked questions into API documentation. Their work promoted knowledge collaboration among developers.

7 Conclusions and future work

API documentation plays an important role in software development. However, the different perspectives of documentation manufacturers and API users confuse third-party developers. In this paper, we use manual inspection of Stack Overflow posts and an online survey to find out what kinds of questions developers ask about the Android API and which flaws of the documentation cause these questions. By analyzing the results, we summarize a guideline on how to improve the documentation. The results are as follows.

A hybrid approach is proposed to investigate the actual requirements of developers for API documentation. We find that API users are more concerned with how to apply the API than with what it achieves.

We summarize 9 factors of documentation that confuse and trouble developers. The results of manual inspection and online survey show similar conclusions.

We summarize a guideline on how to improve the documentation, which contains 5 kinds of supplementary content and 7 types of content representation.

In future work, we plan to include more documentation in our analysis. Furthermore, we want to attempt some improvements based on our results.

References

[1] GENTLEMAN R C, CAREY V J, BATES D M, BOLSTAD B, DETTLING M, DUDOIT S, ELLIS B, GAUTIER L, GE Y, GENTRY J, HORNIK K, HOTHORN T, HUBER W, IACUS S, IRIZARRY R, LEISCH F, LI C, MAECHLER M, ROSSINI A J, SAWITZKI G, SMITH C, SMYTH G, TIERNEY L, YANG J Y H, ZHANG J. Bioconductor: Open software development for computational biology and bioinformatics [J]. Genome Biology, 2004, 5(10): R80.

[2] FORWARD A, LETHBRIDGE T C. The relevance of software documentation, tools and technologies: A survey [C]// Proceedings of the 2002 ACM Symposium on Document Engineering. ACM, 2002: 26-33.

[3] ROEHM T, TIARKS R, KOSCHKE R, MAALEJ W. How do professional developers comprehend software? [C]// Proceedings of the 34th International Conference on Software Engineering. IEEE, 2012: 255-265.

[4] DEKEL U, HERBSLEB J D. Improving API documentation usability with knowledge pushing [C]// Proceedings of the 31st International Conference on Software Engineering. IEEE Computer Society, 2009: 320-330.

[5] ZHONG H, ZHANG L, XIE T, MEI H. Inferring resource specifications from natural language API documentation [C]// 24th IEEE/ACM International Conference IEEE, 2009: 307-318.

[6] CHEN C, ZHANG K. Who asked what: Integrating crowd sourced FAQs into API documentation [C]// Proceedings of the 36th International Conference on Software Engineering. ACM, 2014: 456-459.

[7] YIN G, WANG T, WANG H, FAN Q, ZHANG Y, YU Y, YANG C. OSSEAN: Mining crowd wisdom in open source communities [C]// Service-Oriented System Engineering (SOSE). IEEE, 2015: 367-371.

[8] UDDIN G, ROBILLARD M P. How API documentation fails [J]. IEEE Software, 2015, 32(4): 68-75.

[9] API documentation [EB/OL]. [2017-6-4]. http://blog.ninlabs.com/2013/03/api-documentation/.

[10] BRANDT J, DONTCHEVA M, WESKAMP M, KLEMMER S R. Example centric programming: Integrating web search into the development environment [C]// Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 2010: 513-522.

[11] HARTMANN B, DHILLON M, CHAN M K. Hypersource: Bridging the gap between source and code-related web sites [C]// Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 2011: 2207-2210.

[12] STYLOS J, FAULRING A, YANG Z, MYERS B A. Improving API documentation using API usage information [C]// Visual Languages and Human-Centric Computing IEEE Symposium on. IEEE, 2009: 119-126.

[13] STYLOS J, MYERS B A, YANG Z. Improving API documentation using usage information [C]// CHI’09 Extended Abstracts on Human Factors in Computing Systems. ACM, 2009: 4429-4434.

[14] MCBURNEY P W, MCMILLAN C. An empirical study of the textual similarity between source code and source code summaries [J]. Empirical Software Engineering, 2016, 21(1): 17-42.

[15] JIANG J, YANG Y, HE J, BLANC X, ZHANG L. Who should comment on this pull request? Analyzing attributes for more accurate commenter recommendation in pull-based development [J]. Information and Software Technology, 2017, 84: 48-62.

[16] YANG D, HUSSAIN A, LOPES C V. From query to usable code: An analysis of stack overflow code snippets [C]// Proceedings of the 13th International Conference on Mining Software Repositories. ACM, 2016: 391-402.

[17] YANG C, ZHANG X, ZENG L, FAN Q, WANG T, YU Y, YIN G, WANG H. RevRec: A two-layer reviewer recommendation algorithm in pull-based development model [J]. Journal of Central South University, 2018, 25(5): 1129-1143.

[18] XUAN Q, ZHANG Z Y, FU C, HU H X, FILKOV V. Social synchrony on complex networks [J]. IEEE Transactions on Cybernetics, 2018, 48(5): 1420-1431.

[19] BOSU A, CORLEY C S, HEATON D, CHATTERJI D, CARVER J C, KRAFT N A. Building reputation in StackOverFlow: An empirical investigation [C]// Proceedings of the 10th Working Conference on Mining Software Repositories. IEEE Press, 2013: 89-92.

[20] KRKA I, BRUN Y, MEDVIDOVIC N. Automatic mining of specifications from invocation traces and method invariants [C]// Proceedings of the 22nd ACM SIGSOFT International Symposium on Foundations of Software Engineering. ACM, 2014: 178-189.

[21] MONPERRUS M, EICHBERG M, TEKES E, MEZINI M. What should developers be aware of? An empirical study on the directives of API documentation [J]. Empirical Software Engineering, 2012, 17(6): 703-737.

[22] SAIED M A, SAHRAOUI H, DUFOUR B. An observational study on API usage constraints and their documentation [C]// Software Analysis, Evolution and Reengineering (SANER), 2015 IEEE 22nd International Conference IEEE, 2015: 33-42.

[23] CHE M. An approach to documenting and evolving architectural design decisions [C]// Software Engineering (ICSE), 2013, 35th International Conference IEEE, 2013: 1373-1376.

[24] SHAHIN M, LIANG P, LI Z. Do architectural design decisions improve the understanding of software architecture? two controlled experiments [C]// Proceedings of the 22nd International Conference on Program Comprehension. ACM, 2014: 3-13.

[25] ZAPALOWSKI V, NUNES I, NUNES D J. Revealing the relationship between architectural elements and source code characteristics [C]// Proceedings of the 22nd International Conference on Program Comprehension. ACM, 2014: 14-25.

[26] KIRCHMAYR W, MOSER M, NOCKE L, PICHLER J, TOBER R. Integration of static and dynamic code analysis for understanding legacy source code [C]// Software Maintenance and Evolution (ICSME), 2016 IEEE International Conference IEEE. 2016: 543-552.

[27] HEIJSTEK W, KUHNE T, CHAUDRON M R. Experimental analysis of textual and graphical representations for software architecture design [C]// Empirical Software Engineering and Measurement (ESEM), 2011 International Symposium IEEE. 2011: 167-176.

[28] ALLAMANIS M, BARR E T, BIRD C, SUTTON C. Suggesting accurate method and class names [C]// Proceedings of the 2015 10th Joint Meeting on Foundations of Software Engineering. ACM, 2015: 38-49.

[29] ABID N J, DRAGAN N, COLLARD M L, MALETIC J I. Using stereotypes in the automatic generation of natural language summaries for C++ methods [C]// Software Maintenance and Evolution (ICSME), 2015 IEEE International Conference IEEE. 2015: 561-565.

[30] MORENO L, APONTE J, SRIDHARA G, MARCUS A, POLLOCK L, VIJAYSHANKER K. Automatic generation of natural language summaries for java classes [C]// Program Comprehension (ICPC), 2013 IEEE 21st International Conference IEEE. 2013: 23-32.

[31] CORTES-COY L F, LINARES-VÁSQUEZ M, APONTE J, POSHYVANYK D. On automatically generating commit messages via summarization of source code changes [C]// Source Code Analysis and Manipulation (SCAM), 2014 IEEE 14th International Working Conference IEEE. 2014: 275-284.

[32] NASEHI S M, SILLITO J, MAURER F, BURNS C. What makes a good code example? A study of programming Q&A in StackOverFlow [C]// Software Maintenance (ICSM), 2012 28th IEEE International Conference IEEE. 2012: 25-34.

[33] CHATTERJEE P, NISHI M A, DAMEVSKI K, AUGUSTINE V, POLLOCK L, KRAFT N A. What information about code snippets is available in different software-related documents? an exploratory study [C]// Software Analysis, Evolution and Reengineering (SANER), 2017 IEEE 24th International Conference IEEE. 2017: 382-386.

[34] ABDALKAREEM R, SHIHAB E, RILLING J. What do developers use the crowd for? A study using StackOverflow [J]. IEEE Software, 2017, 34(2): 53-60.

[35] DUALA-EKOKO E, ROBILLARD M P. Asking and answering questions about unfamiliar APIs: An exploratory study [C]// Proceedings of the 34th International Conference on Software Engineering. IEEE, 2012: 266-276.

[36] RICHARDSON L. Beautiful soup [EB/OL]. https://www.crummy.com/software, 2017.

[37] SurveyMonkey: The world's most popular free online survey tool [EB/OL]. [2017-6-4]. http://surveymonkey.com/.

[38] MA W, CHEN L, ZHANG X, ZHOU Y, XU B. How do developers fix cross-project correlated bugs? A case study on the GitHub scientific python ecosystem [C]// Proceedings of the 39th International Conference on Software Engineering. IEEE, 2017: 381-392.

[39] BIRNHOLTZ J, MEROLA N A R, PAUL A. Is it weird to still be a virgin: Anonymous, locally targeted questions on facebook confession boards [C]// Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM, 2015: 2613-2622.

[40] Stack exchange data dump [EB/OL]. https://archive.org/details/stackexchange.

[41] BACCHELLI A. Mining challenge 2013: Stack overflow [C]// The 10th Working Conference on Mining Software Repositories. 2013.

[42] PARNIN C, TREUDE C, GRAMMEL L, STOREY M A. Crowd documentation: Exploring the coverage and the dynamics of API discussions on stack overflow [R]. Atlanta, USA: Georgia Institute of Technology, 2012.

[43] KAVALER D, POSNETT D, GIBLER C, CHEN H, DEVANBU P T, FILKOV V. Using and asking: APIs used in the android market and asked about in StackOverFlow [C]// International Conference on Social Informatics. Springer, Cham, 2013: 405-418.

[44] LINARES-VASQUEZ M, BAVOTA G, di PENTA M, OLIVETO R, POSHYVANYK D. How do API changes trigger StackOverflow discussions? A study on the Android SDK [C]// Proceedings of the 22nd International Conference on Program Comprehension. ACM, 2014: 83-94.

[45] YIN R K. Case study research design and methods third edition [M]// Applied Social Research Methods Series. Sage Publications, 1989.

[46] DIG D, JOHNSON R. How do APIs evolve? A story of refactoring [J]. Journal of Software: Evolution and Process, 2006, 18(2): 83-107.

[47] LI J, XIONG Y, LIU X, ZHANG L. How does web service API evolution affect clients? [C]// Web Services (ICWS), 2013 IEEE 20th International Conference. IEEE, 2013: 300-307.

[48] ROBILLARD M P. What makes APIs hard to learn? Answers from developers [J]. IEEE Software, 2009, 26(6): 27-34.

[49] ROBILLARD M P, DELINE R. A field study of API learning obstacles [J]. Empirical Software Engineering, 2011, 16(6): 703-732.

[50] KIM J, LEE S, HWANG S W, KIM S. Enriching documents with examples: A corpus mining approach [J]. ACM Transactions on Information Systems (TOIS), 2013, 31(1): 1-27.

(Edited by FANG Jing-hua)

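Chinese summary

An exploratory study of crowd discussions about the Android application programming interface on the Stack Overflow platform

Abstract: Although Android's official documentation keeps growing richer, more and more Android developers prefer to seek help in Q&A communities such as Stack Overflow rather than look for answers in the official documentation. Some researchers have studied the limitations of official documentation and proposed improvements, but few have examined the problem from the perspective of third-party developers' needs. This paper uses a cross-validation approach to study the problem from the perspectives of both API (application programming interface) documentation maintainers and third-party users, and proposes a hybrid method that combines manual inspection with a questionnaire survey to explore how the concerns of these stakeholders differ. In the manual inspection, we analyzed 1000 Stack Overflow posts; in the online survey, we received 319 questionnaire responses. Through mutual verification of the inspection and the survey, we found that third-party users care more about how to use an API, whereas the official documentation mostly describes API functionality and lacks usage examples. In addition, we identified 9 flaws in the official documentation and listed 12 ways to improve API documentation.

Key words: API documentation; Android; online survey; Stack Overflow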

Foundation item: Project(2018-YFB1004202) supported by the National Key R&D Program of China; Project(61702534) supported by the National Natural Science Foundation of China

Received date: 2018-07-02; Accepted date: 2019-01-17

Corresponding author: WANG Tao, PhD, Assistant Professor; Tel: +86-13975850702; E-mail: wangtao2005@nudt.edu.cn; ORCID: 0000-0001-8681-3026